
Every startup’s journey is unique, and the road to success isn’t linear, but cost is a narrative in every business at every point in time, especially during economic downturns. In a startup, the conversation around cost shifts when moving from the experimental and gaining traction phases to the high growth and optimizing phases. In the first two phases, a startup needs to operate lean and fast to come to a product-market fit, but in the later stages the importance of operational efficiency eventually grows.

Shifting the company’s mindset into achieving and maintaining cost efficiency is really difficult. For startup engineers who thrive on building something new, cost optimization is typically not an exciting topic. For these reasons, cost efficiency often becomes a bottleneck for startups at some point in their journey, just like the accumulation of technical debt.

How did you get into the bottleneck?

In the early experimental phase of startups, when funding is limited, whether bootstrapped by founders or supported by seed investment, startups generally focus on getting market traction before they run out of their financial runway. Teams will pick solutions that get the product to market quickly so the company can generate revenue, keep users happy, and outperform competitors.

In these phases, cost inefficiency is an acceptable trade-off. Engineers may choose to go with quick custom code instead of dealing with the hassle of setting up a contract with a SaaS provider. They may deprioritize cleanups of infrastructure components that are no longer needed, or not tag resources because the organization is 20 people strong and everyone knows everything. Getting to market quickly is paramount – after all, the startup might not be there tomorrow if product-market fit remains elusive.

After seeing some success with the product and reaching a rapid growth phase, those earlier decisions can come back to hurt the company. With traffic spiking, cloud costs surge beyond anticipated levels. Managers know the company’s cloud costs are high, but they may have trouble pinpointing the cause and guiding their teams out of the situation.

At this point, costs are starting to be a bottleneck for the business. The CFO is noticing, and the engineering team is getting a lot of scrutiny. At the same time, in preparation for another funding round, the company would need to show reasonable COGS (Cost of Goods Sold).

None of the early decisions were wrong. Creating a perfectly scalable and cost-efficient product is not the right priority when market traction for the product is unknown. The question at this point, when cost starts becoming a problem, is how to start reducing costs and shift the company culture to sustain the improved operational cost efficiency. These changes will ensure the continued growth of the startup.

Signs you are approaching a scaling bottleneck

Lack of cost visibility and attribution

When a company uses multiple service providers (cloud, SaaS, development tools, etc.), the usage and cost data of these services lives in disparate systems. Making sense of the total technology cost for a service, product, or team requires pulling this data from various sources and linking the cost to the corresponding product or feature set.

These cost reports (such as cloud billing reports) can be overwhelming, and consolidating them into something easy to understand is quite an effort. Without proper cloud infrastructure tagging conventions, it is impossible to correctly attribute costs to specific aggregates at the service or team level. Yet unless this level of accounting clarity is enabled, teams will be forced to operate without fully understanding the cost implications of their decisions.
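To make the attribution problem concrete, here is a minimal sketch that rolls billing line items up by a `team` tag and reports how much spend carries no tag at all. The record shape, the `team` tag key, and the figures are hypothetical simplifications; real cloud billing exports have their own schemas and many more fields.

```python
from collections import defaultdict

# Hypothetical, simplified billing line items. Real exports (e.g. cloud
# billing reports) use provider-specific columns and tag formats.
LINE_ITEMS = [
    {"service": "compute", "cost": 120.0, "tags": {"team": "payments"}},
    {"service": "storage", "cost": 30.0, "tags": {"team": "search"}},
    {"service": "compute", "cost": 45.5, "tags": {}},
    {"service": "database", "cost": 80.0, "tags": {"team": "payments"}},
]


def attribute_costs(line_items, tag_key="team"):
    """Sum cost per value of `tag_key`; items without the tag land in 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


totals = attribute_costs(LINE_ITEMS)
untagged_share = totals.get("untagged", 0.0) / sum(totals.values())
for owner, cost in sorted(totals.items()):
    print(f"{owner:<10} {cost:>8.2f}")
print(f"untagged share: {untagged_share:.1%}")
```

The size of the `untagged` bucket is a quick indicator of how far the tagging conventions are from supporting service- or team-level attribution.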

Cost not a consideration in engineering solutions

Engineers consider various factors when making engineering decisions – functional and non-functional requirements (performance, scalability, security, etc.). Cost, however, is not always considered. Part of the reason, as covered above, is that development teams often lack visibility on cost. In some cases, while they have a reasonable level of visibility on the cost of their part of the tech landscape, cost may not be perceived as a key consideration, or may be seen as another team’s concern.

Signs of this problem might be the lack of cost considerations in design documents / RFCs / ADRs, or an engineering manager being unable to show how the cost of their products will change with scale.
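A simple fixed-plus-variable cost model is one way for a team to show how cost changes with scale. The sketch below assumes cost grows linearly with the units served; the fixed cost, per-unit cost, and traffic figures are hypothetical, and real systems often have step changes (new pricing tiers, extra clusters) that a linear model misses.

```python
def projected_monthly_cost(units, fixed_cost, variable_cost_per_unit):
    """Linear model: fixed monthly cost plus a cost per unit served."""
    return fixed_cost + variable_cost_per_unit * units


# Hypothetical inputs a team would estimate for its own product.
CURRENT_UNITS = 100_000  # e.g. requests per month today
FIXED_COST = 2_000.0     # baseline spend that does not scale with traffic
PER_UNIT_COST = 0.01     # marginal cost per request

for multiplier in (1, 5, 10):
    units = CURRENT_UNITS * multiplier
    cost = projected_monthly_cost(units, FIXED_COST, PER_UNIT_COST)
    print(f"{multiplier:>2}x: total={cost:,.0f}  per unit={cost / units:.4f}")
```

Under this model the per-unit cost falls as volume grows because the fixed part is spread over more units; the variable term is the one a team should watch most closely.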

Homegrown non-differentiating capabilities

Companies sometimes maintain custom tools that have major overlaps in capabilities with third-party tools, whether open-source or commercial. This may have happened because the custom tools predate those third-party solutions – for example, custom container orchestration tools built before Kubernetes came along. It could also have grown from an early shortcut that implemented a subset of the capability provided by mature external tools. Over time, individual decisions to incrementally build on that early shortcut lead the team past the tipping point at which adopting an external tool would have made sense.

Over the long term, the total cost of ownership of such homegrown systems can become prohibitive. Homegrown systems are typically very easy to start and quite difficult to master.

Overlapping capabilities in multiple tools / tool explosion

Having multiple tools with the same purpose – or at least overlapping purposes, e.g. multiple CI/CD pipeline tools or API observability tools – can naturally create cost inefficiencies. This often comes about when there isn’t a paved road, and each team autonomously picks its technical stack rather than choosing tools that are already licensed or preferred by the company.

Inefficient contract structure for managed services

Choosing managed services for non-differentiating capabilities, such as SMS/email, observability, payments, or authorization, can greatly support a startup’s pursuit of getting its product to market quickly and keeping operational complexity in check.

Managed service providers often offer compelling – cheap or free – starter plans for their services. These pricing models, however, can get expensive more quickly than anticipated. Cheap starter plans aside, the pricing model negotiated initially may not suit the startup’s current or projected usage. Something that worked for a small organization with few customers and engineers might become too expensive when it grows to 5x or 10x those numbers. An escalating trend in the cost of a managed service per user (be it employees or customers) as the company achieves scaling milestones is a sign of a growing inefficiency.
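The sketch below shows how a hypothetical plan (a flat fee covering an included number of users, then a per-user price beyond it) produces exactly this escalating per-user trend as usage grows to 5x and 10x. The plan structure and prices are invented for illustration and are not taken from any provider.

```python
def plan_cost(users, base_fee, included_users, price_per_extra_user):
    """Monthly cost of a hypothetical plan: a flat fee covers `included_users`,
    and each user beyond that is billed at `price_per_extra_user`."""
    extra_users = max(0, users - included_users)
    return base_fee + extra_users * price_per_extra_user


def is_escalating(values):
    """True if every value is strictly greater than the one before it."""
    return all(later > earlier for earlier, later in zip(values, values[1:]))


# Hypothetical starter plan: $50/month covers 100 users, then $4 per extra user.
PLAN = dict(base_fee=50.0, included_users=100, price_per_extra_user=4.0)

per_user = []
for users in (100, 500, 1_000):  # today, 5x, 10x
    cost = plan_cost(users, **PLAN)
    per_user.append(cost / users)
    print(f"{users:>5} users: total=${cost:,.0f}  per user=${cost / users:.2f}")

print("escalating per-user cost:", is_escalating(per_user))
```

Running the same calculation against a negotiated pricing model is a cheap way to check whether a contract still suits projected usage.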

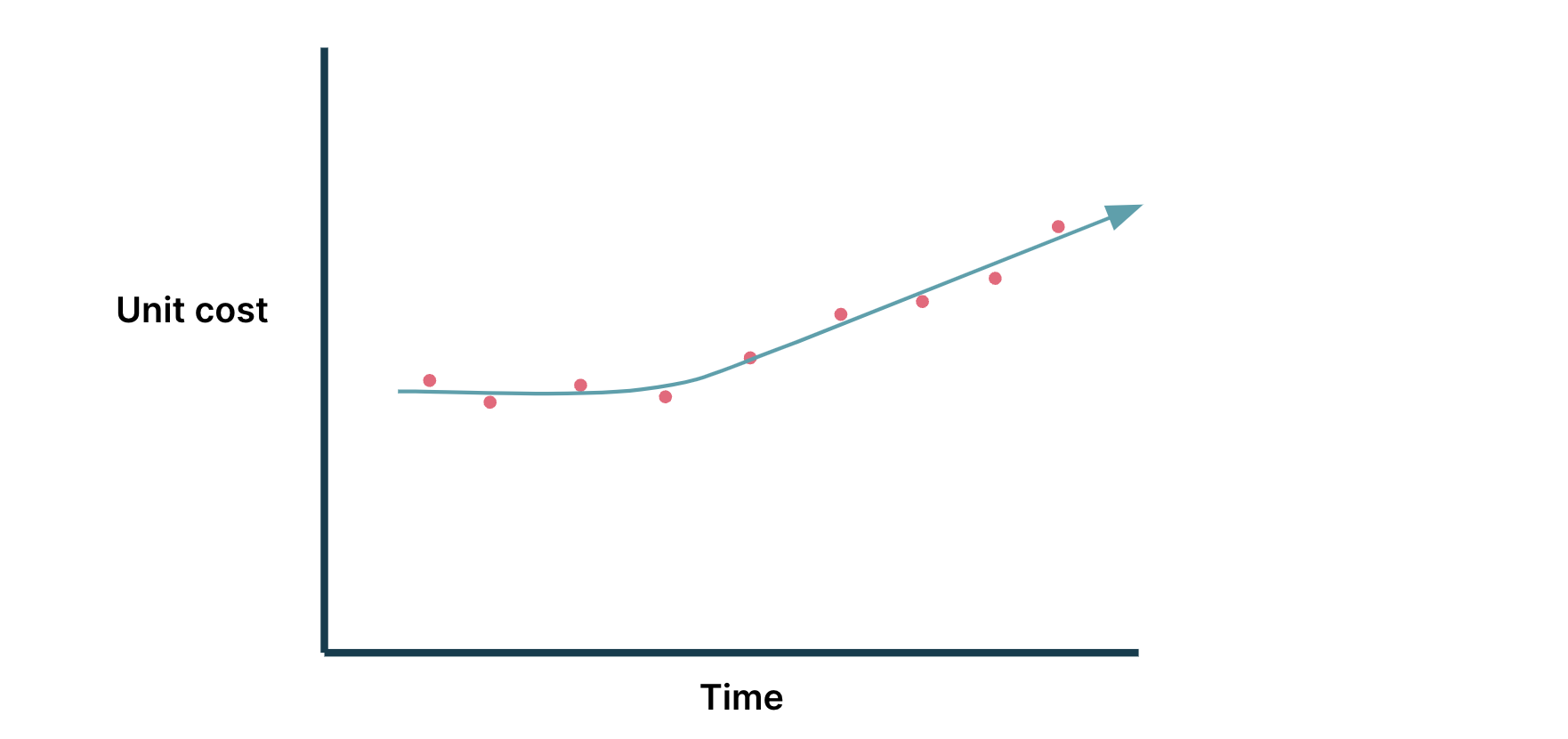

Unable to achieve economies of scale

In any structure, the price is correlated to the variety of

requests, transactions, customers utilizing the product, or a mixture of

them. Because the product positive factors market traction and matures, corporations hope

to achieve economies of scale, lowering the common value to serve every consumer

or request (unit

value)

as its consumer base and site visitors grows. If an organization is having bother

attaining economies of scale, its unit value would as a substitute improve.

Determine 1: Not reaching economies of scale: growing unit value

Be aware: on this instance diagram, it’s implied that there are extra

models (requests, transactions, customers as time progresses)
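Unit cost is the total cost divided by the units served in the same period. This minimal sketch computes it per month and checks whether it is falling, i.e. whether economies of scale are being reached; the monthly figures are hypothetical.

```python
def unit_costs(total_costs, units):
    """Average cost to serve one unit (request, transaction, or user) per period."""
    return [cost / n for cost, n in zip(total_costs, units)]


def reaching_economies_of_scale(total_costs, units):
    """True if unit cost never rises from one period to the next."""
    costs = unit_costs(total_costs, units)
    return all(later <= earlier for earlier, later in zip(costs, costs[1:]))


# Hypothetical monthly figures: traffic grows, but spend grows faster.
monthly_cost = [10_000, 16_000, 27_000]
monthly_requests = [1_000_000, 1_500_000, 2_200_000]

print([round(c, 5) for c in unit_costs(monthly_cost, monthly_requests)])
print("economies of scale:", reaching_economies_of_scale(monthly_cost, monthly_requests))
```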

How do you get out of the bottleneck?

A common scenario for our team when we optimize a scaleup is that the company has noticed the bottleneck, either by monitoring the signs mentioned above or because it is just plain obvious (the planned budget was completely blown). This triggers an initiative to improve cost efficiency. Our team likes to organize the initiative around two phases: a reduce phase and a sustain phase.

The reduce phase is focused on short-term wins – “stopping the bleeding”. To do this, we need to create a multi-disciplinary cost optimization team. There may be some idea of what is possible to optimize, but it is necessary to dig deeper to really understand. After the initial opportunity analysis, the team defines the approach, prioritizes based on impact and effort, and then optimizes.

After the short-term gains of the reduce phase, a properly executed sustain phase is critical to maintaining optimized cost levels so that the startup does not run into this problem again in the future. To support this, the company’s operating model and practices are adapted to improve accountability and ownership around cost, so that product and platform teams have the necessary tools and information to continue optimizing.

To illustrate the reduce and sustain phased approach, we will describe a recent cost optimization engagement.

Case study: Databricks cost optimization

A client of ours reached out because their costs were increasing more than they had expected. They had already identified Databricks as a top cost driver and asked us to help optimize the cost of their data infrastructure. Urgency was high – the increasing cost was starting to eat into their other budget categories, and it was still growing.

After an initial analysis, we quickly formed our cost optimization team and charged it with a goal of reducing cost by ~25% relative to the chosen baseline.

The “Reduce” phase

With Databricks as the focus area, we enumerated all the ways we could influence and manage costs. At a high level, Databricks cost consists of the virtual machine cost paid to the cloud provider for the underlying compute capacity, and the cost paid to Databricks (Databricks Unit cost / DBU).

Each of these cost categories has its own levers – for example, DBU cost can change depending on cluster type (ephemeral job clusters are cheaper), purchase commitments (Databricks Commit Units / DBCUs), or optimizing the runtime of the workload that runs on it.
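The cost composition above can be written as a small function: VM cost is VM hours times the cloud provider’s hourly rate, and DBU cost is DBUs consumed times the DBU rate. The sketch compares the same workload at two DBU rates to show the cluster-type lever. All rates are hypothetical placeholders (actual rates depend on cloud, region, tier, and contract), and modeling a commitment purchase as a flat `commit_discount` on DBU cost is a simplifying assumption.

```python
def databricks_cost(vm_hours, vm_rate_per_hour, dbus, dbu_rate, commit_discount=0.0):
    """Total cost = VM cost (paid to the cloud provider) + DBU cost (paid to Databricks).

    `commit_discount` is a simplifying assumption: a flat fractional discount on
    DBU cost standing in for pre-purchased commitments (DBCUs).
    """
    vm_cost = vm_hours * vm_rate_per_hour
    dbu_cost = dbus * dbu_rate * (1 - commit_discount)
    return vm_cost + dbu_cost


# Hypothetical workload and rates, for illustration only.
WORKLOAD = dict(vm_hours=200, vm_rate_per_hour=0.50, dbus=300)

all_purpose = databricks_cost(**WORKLOAD, dbu_rate=0.55)
job_cluster = databricks_cost(**WORKLOAD, dbu_rate=0.15)
job_with_commit = databricks_cost(**WORKLOAD, dbu_rate=0.15, commit_discount=0.2)

print(f"all-purpose cluster: ${all_purpose:,.2f}")
print(f"job cluster:         ${job_cluster:,.2f}")
print(f"job + commitment:    ${job_with_commit:,.2f}")
```

Optimizing the workload’s runtime shows up in this model as fewer `vm_hours` and fewer `dbus`, so it reduces both cost components at once.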

As we were tasked to “save cost yesterday”, we went in search of quick wins. We prioritized those levers against their potential impact on cost and their effort level. Since the transformation logic in the data pipelines is owned by the respective product teams and our working group did not have a good handle on it, infrastructure-level changes such as cluster rightsizing, using ephemeral clusters where appropriate, and experimenting with the Photon runtime had lower effort estimates than optimizing the transformation logic.
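One lightweight way to rank levers by impact and effort is estimated savings per day of effort. The `Lever` type, the scoring rule, and every number below are hypothetical illustrations, not the estimates from this engagement.

```python
from dataclasses import dataclass


@dataclass
class Lever:
    name: str
    est_monthly_savings: float  # estimated impact, currency units per month
    effort_days: float          # estimated effort to implement

    @property
    def savings_per_effort_day(self) -> float:
        return self.est_monthly_savings / self.effort_days


def prioritize(levers):
    """Order levers by estimated monthly savings per day of effort, highest first."""
    return sorted(levers, key=lambda lever: lever.savings_per_effort_day, reverse=True)


# Hypothetical estimates, for illustration only.
LEVERS = [
    Lever("optimize transformation logic", est_monthly_savings=9_000, effort_days=60),
    Lever("rightsize clusters", est_monthly_savings=6_000, effort_days=5),
    Lever("use ephemeral job clusters", est_monthly_savings=4_000, effort_days=8),
    Lever("trial Photon runtime", est_monthly_savings=2_500, effort_days=4),
]

for lever in prioritize(LEVERS):
    print(f"{lever.name:<30} {lever.savings_per_effort_day:>7.0f} per effort-day")
```

A ratio like this is only a starting point for discussion: in this hypothetical data, the lever with the largest absolute savings still ranks last because its effort is so high.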

We started executing on the low-hanging fruit, collaborating with the respective product teams. As we progressed, we monitored the cost impact of our actions every two weeks to see whether our cost impact projections were holding up or whether we needed to adjust our priorities.

The savings added up. A few months in, we exceeded our goal of ~25% monthly cost savings against the chosen baseline.
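Tracking progress against the ~25% goal comes down to comparing current spend with the chosen baseline at each checkpoint. This sketch assumes each two-weekly checkpoint is expressed as a projected monthly run rate; the baseline and checkpoint values are hypothetical.

```python
def savings_vs_baseline(baseline_monthly_cost, current_monthly_cost):
    """Fractional saving relative to the chosen baseline (0.25 == 25%)."""
    return (baseline_monthly_cost - current_monthly_cost) / baseline_monthly_cost


GOAL = 0.25  # the ~25% reduction target

# Hypothetical checkpoints, taken every two weeks as monthly run-rate estimates.
BASELINE = 100_000.0
CHECKPOINTS = [95_000.0, 88_000.0, 80_000.0, 73_000.0]

for i, cost in enumerate(CHECKPOINTS, start=1):
    saving = savings_vs_baseline(BASELINE, cost)
    status = "goal met" if saving >= GOAL else "below goal"
    print(f"checkpoint {i}: {saving:.0%} saved ({status})")
```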

The “Sustain” phase

However, we did not want costs in the areas we had optimized to creep back up when we turned our attention to other areas still to be optimized. The tactical steps we took had reduced cost, but sustaining the lower spending required continued attention because of a real risk: every engineer was a Databricks workspace administrator capable of creating clusters with any configuration they chose, and teams were not monitoring how much their workspaces cost. They were not held accountable for those costs either.

To address this, we set out to do two things: tighten access control, and improve cost awareness and accountability.

To tighten access control, we limited administrative access to just the people who needed it. We also used Databricks cluster policies to limit the cluster configuration options engineers can pick – we wanted to strike a balance between allowing engineers to make changes to their clusters and limiting their choices to a sensible set of options. This allowed us to minimize overprovisioning and control costs.
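A cluster policy is a JSON definition that maps cluster attribute paths to rules. The sketch below builds one in Python using the `allowlist`, `range`, and `fixed` policy types, and adds a hypothetical local `violations` helper that pre-checks a cluster spec against it. The node types, limits, and tag value are assumptions, and Databricks enforces policies itself when clusters are created or edited, so the helper is purely illustrative.

```python
import json

# Sketch of a Databricks cluster policy definition. Attribute paths and the
# policy types used (allowlist, range, fixed) follow the cluster policy
# definition format; the node types, limits, and tag value are hypothetical.
POLICY = {
    "node_type_id": {
        "type": "allowlist",
        "values": ["i3.xlarge", "i3.2xlarge"],
        "defaultValue": "i3.xlarge",
    },
    "autoscale.max_workers": {"type": "range", "maxValue": 8, "defaultValue": 4},
    "autotermination_minutes": {
        "type": "range",
        "minValue": 10,
        "maxValue": 60,
        "defaultValue": 30,
    },
    "custom_tags.team": {"type": "fixed", "value": "analytics"},
}

# The policy definition is supplied to Databricks as a JSON document.
policy_definition = json.dumps(POLICY, indent=2)


def get_path(spec, dotted_path):
    """Resolve a dotted policy path such as 'autoscale.max_workers' in a cluster spec."""
    value = spec
    for key in dotted_path.split("."):
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value


def violations(cluster_spec, policy):
    """Hypothetical local pre-check; Databricks enforces policies itself.

    Attributes missing from the spec are skipped, since defaults would apply.
    """
    problems = []
    for path, rule in policy.items():
        value = get_path(cluster_spec, path)
        if value is None:
            continue
        kind = rule["type"]
        if kind == "fixed" and value != rule["value"]:
            problems.append(f"{path} must be {rule['value']!r}")
        elif kind == "allowlist" and value not in rule["values"]:
            problems.append(f"{path}={value!r} is not allowed")
        elif kind == "range":
            low = rule.get("minValue", float("-inf"))
            high = rule.get("maxValue", float("inf"))
            if not low <= value <= high:
                problems.append(f"{path}={value!r} is outside [{low}, {high}]")
    return problems


oversized = {
    "node_type_id": "i3.8xlarge",
    "autoscale": {"min_workers": 2, "max_workers": 50},
    "autotermination_minutes": 0,
    "custom_tags": {"team": "analytics"},
}
for problem in violations(oversized, POLICY):
    print(problem)
```

Setting a `minValue` on `autotermination_minutes` is one way to prevent clusters from being created with auto-termination disabled (a value of 0), which is a common source of idle cost.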

To improve cost awareness and accountability, we configured budget alerts to be sent to the owners of the respective workspaces if a particular month’s cost exceeded the predetermined threshold for that workspace.
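The alerting rule itself is simple: compare each workspace’s monthly cost with its own threshold and notify the owner when it is exceeded. This sketch covers only that check. The `Workspace` type, owner addresses, and figures are hypothetical, and fetching the costs and delivering the alerts (email, chat, or a native budgeting feature) is left out.

```python
from dataclasses import dataclass


@dataclass
class Workspace:
    name: str
    owner_email: str       # hypothetical owner contact
    monthly_budget: float  # predetermined threshold for this workspace


def budget_alerts(workspaces, monthly_costs):
    """Return (owner, message) pairs for workspaces whose monthly cost exceeds budget."""
    alerts = []
    for ws in workspaces:
        cost = monthly_costs.get(ws.name, 0.0)
        if cost > ws.monthly_budget:
            message = f"{ws.name}: cost {cost:,.0f} exceeds budget {ws.monthly_budget:,.0f}"
            alerts.append((ws.owner_email, message))
    return alerts


# Hypothetical workspaces and one month's costs.
WORKSPACES = [
    Workspace("marketing-analytics", "owner-a@example.com", 8_000.0),
    Workspace("ml-training", "owner-b@example.com", 20_000.0),
]
COSTS = {"marketing-analytics": 9_250.0, "ml-training": 14_100.0}

for owner, message in budget_alerts(WORKSPACES, COSTS):
    print(f"to {owner}: {message}")
```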

Both phases were key to reaching and sustaining our objectives. The savings we achieved in the reduce phase stayed stable for a number of months, apart from completely new workloads.
